As organizations continue to leverage artificial intelligence to fuel innovation and efficiency, it's crucial to stay vigilant against amplifying existing biases and inequalities within datasets.

As technology rapidly evolves, artificial intelligence (AI) has emerged as both a promising tool and a source of concern for business leaders pursuing digital transformation. As we integrate AI into more aspects of our lives, from automating business systems to screening job applicants, it’s becoming increasingly clear that bias can seep into these systems, perpetuating inequalities and eroding trust.

The challenge, therefore, is twofold: How can we balance automation with human oversight to ensure equitable AI deployment? And how can we do so while addressing the inherent risks and promoting accountability and transparency in AI development?


More than one in three (36%) organizations surveyed have experienced challenges or a direct business impact due to AI bias in their algorithms.


What is AI Bias?

AI bias refers to preferences, prejudices, or inaccuracies present in artificial intelligence systems that lead to discriminatory or skewed results in the decision-making process. In the context of machine learning (a subset of AI), bias refers to the tendency of a model to consistently learn and reproduce patterns or decisions from biased training data.

Balancing Automation and Human Oversight: The Critical Equation

AI-powered automation provides an unprecedented level of efficiency and scalability. However, if not properly monitored, it can inadvertently introduce or magnify biases inherent in historical data or algorithm designs. This, in turn, can lead to discriminatory outcomes that disproportionately affect historically marginalized groups.

Therefore, it becomes essential to strike the right balance between automation and human oversight. Such oversight allows for critical evaluation, helping to minimize bias (or the risk of bias) and to ensure fairness in AI deployment.

How Bias Creeps into AI Systems

One of the primary concerns surrounding AI is its tendency to perpetuate human biases inherent in training data. Because humans create AI models, their biases can carry through into models that produce biased or skewed outputs that “reflect and perpetuate human biases within a society.”

For example, societal prejudices, often mirrored in historical data, can inadvertently reinforce biases within AI algorithms. This phenomenon is evident in biased hiring algorithms, which may discriminate against specific groups or demographics and thereby perpetuate existing inequalities in employment opportunities. Similarly, biased recommendation systems can exacerbate filter bubbles, limiting individuals’ exposure to diverse perspectives.

Sources of Bias in AI

Where do the biases in AI originate? They can stem from various sources, including data collection methods, skewed data, the design of machine learning algorithms, and, of course, human decision-making. The most common include:

Training Data Bias

Training data bias occurs when the data used to train machine learning models already carries some kind of bias, potentially leading to skewed predictions or discriminatory outcomes. After all, the quality of a model’s output depends on the quality of the data it receives.
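To make this concrete, here is a minimal Python sketch, assuming a hypothetical history of invoices labeled by whether each was fast-tracked for payment. The `train_majority_model` helper is illustrative, not a real library function: it simply predicts the most common historical outcome for each supplier group. Because it learns only from past decisions, an 80% vs. 30% skew in the history hardens into an all-or-nothing rule.

```python
from collections import Counter, defaultdict

# Hypothetical historical records: (supplier_group, was_fast_tracked).
# Large suppliers were fast-tracked 8 of 10 times; small suppliers 3 of 10.
history = (
    [("large", True)] * 8 + [("large", False)] * 2
    + [("small", True)] * 3 + [("small", False)] * 7
)

def train_majority_model(records):
    """Learn the most common historical outcome for each group (illustrative only)."""
    counts = defaultdict(Counter)
    for group, label in records:
        counts[group][label] += 1
    return {group: c.most_common(1)[0][0] for group, c in counts.items()}

def fast_track_rate(records, group):
    """Share of a group's historical records that were fast-tracked."""
    labels = [label for g, label in records if g == group]
    return sum(labels) / len(labels)

model = train_majority_model(history)
for group in ("large", "small"):
    print(f"{group}: historical rate {fast_track_rate(history, group):.0%}, "
          f"model always predicts {model[group]}")
# large: historical rate 80%, model always predicts True
# small: historical rate 30%, model always predicts False
```

Real models are far more complex than a per-group majority vote, but the underlying risk is the same: a model fit to skewed outcomes can reproduce, and even sharpen, that skew.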

Algorithm Bias

Algorithm bias comes from biases in the design and implementation of machine learning algorithms, where prejudice in training data or in developer decision-making processes can inadvertently influence the machine learning model’s output.

Cognitive Bias

Cognitive bias is rooted in human thinking patterns based on our experiences. These biases can work their way into AI systems through decision-making processes such as data selection, model design, or even interpretation of results. This can then have unintended consequences, such as perpetuating existing prejudices.

For example, a psychology study published in Scientific Reports demonstrated that bias instilled in a user by an AI model persisted even after their engagement with the AI had ended.

Legal and Ethical Implications

The general lack of accountability and transparency in AI systems can pose significant legal and ethical risks for companies. When AI systems make biased decisions, they can result in legal repercussions, reputational damage, and a loss of customer trust. Furthermore, opaque algorithms can prevent individuals from understanding how decisions about them are made, further eroding confidence in AI-powered systems.

To address these concerns, it’s crucial to implement measures to ensure accountability and transparency throughout the AI development lifecycle.


Government Pressure is On



According to DataRobot's State of AI Bias report, 81% of business leaders want government regulation to define and prevent AI bias.


Bias Mitigation Through Monitoring and Transparency

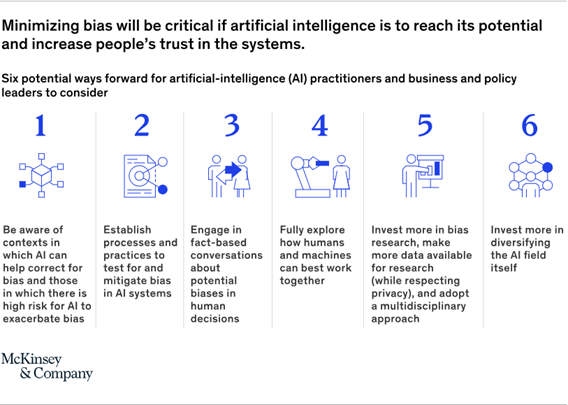

Rather than waiting for problems to surface, organizations can take concrete steps to reduce bias in AI. This requires a proactive approach that prioritizes continuous monitoring, audits, and transparent decision-making processes.

Regular audits can identify and rectify biases present in AI systems, supporting fairness and equity. Furthermore, transparency regarding the data used, algorithms deployed, and decision-making criteria fosters trust among users and stakeholders.
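One common audit check compares favorable-outcome rates across groups. The Python sketch below computes a disparate impact ratio (the lowest group's rate divided by the highest group's rate) and flags results below 0.8, a threshold borrowed from the "four-fifths rule" used in U.S. employment-selection guidance. The group names and counts are hypothetical, and 0.8 is a rule of thumb rather than a universal legal test.

```python
def selection_rates(outcomes):
    """Map each group to its share of favorable outcomes.

    `outcomes` maps group name -> (favorable_count, total_count).
    """
    return {g: fav / total for g, (fav, total) in outcomes.items() if total > 0}

def disparate_impact_audit(outcomes, threshold=0.8):
    """Return (ratio, flagged): lowest rate / highest rate, and whether it is below threshold."""
    rates = selection_rates(outcomes)
    highest = max(rates.values())
    if highest == 0:
        # No group received any favorable outcome, so there is no disparity to measure.
        return 1.0, False
    ratio = min(rates.values()) / highest
    return ratio, ratio < threshold

# Hypothetical audit data: invoices approved for early payment, by supplier group.
audit = {
    "large suppliers": (90, 100),
    "small suppliers": (54, 100),
}
ratio, flagged = disparate_impact_audit(audit)
print(f"disparate impact ratio = {ratio:.2f}, flagged = {flagged}")
# disparate impact ratio = 0.60, flagged = True
```

A flag like this does not prove discrimination on its own; it marks where a human reviewer should look more closely at the data and the decision criteria.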

By embracing transparency and accountability, organizations can uphold ethical standards and mitigate risks associated with biased AI systems.

Let’s look into this further.

Addressing Bias in Accounts Payable (AP) Automation

Measures that organizations can implement to promote fairness, transparency, and accountability include:

1. Use Diverse, Representative Data

Ensure that the training data used in AP automation systems is diverse, representative, and as free from bias as possible. This entails sourcing data sets from varied demographics and ensuring equitable representation across all categories.
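A simple first check is to compare how often each category appears in the training data against a reference share, such as that category's share of the active supplier base. The Python sketch below uses hypothetical supplier-size categories, counts, and reference shares, and flags any category whose share of the data falls below half of its reference share; that tolerance is an arbitrary illustration, not a standard.

```python
def underrepresented(train_counts, reference_shares, tolerance=0.5):
    """Return categories whose share of the training data is below
    `tolerance` x their reference share (e.g. share of the supplier base)."""
    total = sum(train_counts.values())
    flagged = {}
    for category, ref_share in reference_shares.items():
        data_share = train_counts.get(category, 0) / total
        if data_share < tolerance * ref_share:
            flagged[category] = (round(data_share, 3), ref_share)
    return flagged

# Hypothetical invoice counts in the training set, by supplier size.
train_counts = {"large": 8_000, "mid-size": 1_700, "small": 250, "micro": 50}
# Hypothetical share of each category in the active supplier base.
reference_shares = {"large": 0.40, "mid-size": 0.30, "small": 0.20, "micro": 0.10}

print(underrepresented(train_counts, reference_shares))
# {'small': (0.025, 0.2), 'micro': (0.005, 0.1)}
```

Flagged categories are candidates for additional data collection or reweighting before the model is retrained.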

2. Conduct Regular Audits and Monitoring

Conduct regular audits and monitor AP automation systems to identify and rectify biases in algorithms, underlying data, and decision-making processes. Regular reviews help maintain system integrity and reduce the risk of human-made algorithms perpetuating human biases.
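Monitoring can be as simple as tracking an outcome metric per group over time and raising an alert when the gap widens. This Python sketch assumes hypothetical monthly payment delays (in days) per supplier group and an arbitrary five-day alert threshold; in practice, the metric, grouping, and threshold would be chosen together with finance and compliance teams.

```python
from statistics import mean

# Hypothetical payment delays (days past invoice date), by month and supplier group.
delays = {
    "2024-01": {"large": [2, 3, 1], "small": [3, 4, 2]},
    "2024-02": {"large": [2, 2, 3], "small": [6, 8, 7]},
    "2024-03": {"large": [1, 2, 2], "small": [9, 10, 11]},
}

def delay_gap_alerts(delays_by_month, max_gap_days=5.0):
    """Yield (month, gap) where the slowest group's mean delay exceeds the
    fastest group's mean delay by more than `max_gap_days`."""
    for month, groups in sorted(delays_by_month.items()):
        means = {g: mean(v) for g, v in groups.items() if v}
        if len(means) < 2:
            continue  # need at least two groups to compare
        gap = max(means.values()) - min(means.values())
        if gap > max_gap_days:
            yield month, gap

for month, gap in delay_gap_alerts(delays):
    print(f"{month}: average delay gap of {gap:.1f} days exceeds threshold")
# 2024-03: average delay gap of 8.3 days exceeds threshold
```

Note that February's widening gap (about 4.7 days) stays under the threshold; plotting the gap over time, not just alerting on it, helps reviewers catch a drift before it crosses the line.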

3. Maintain Transparency and Justification

Provide transparency into how AP automation systems operate. This includes documenting the factors that influence automated decisions and outlining the criteria used to prioritize payments. The ability and willingness to justify decisions enhances trust in AI systems and enables stakeholders to scrutinize the system’s operations for fairness.
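One way to make payment prioritization justifiable is to have the scoring logic return its reasons alongside the score. The criteria and weights in this Python sketch (days until due, an overdue bonus, and early-payment discounts) are hypothetical examples, not a recommended policy; the point is that every factor contributing to a decision is recorded and can be reviewed.

```python
from dataclasses import dataclass, field

@dataclass
class Invoice:
    invoice_id: str
    days_until_due: int            # negative if already overdue
    early_pay_discount_pct: float  # e.g. 2.0 for "2/10 net 30" terms

@dataclass
class Decision:
    invoice_id: str
    score: float
    reasons: list = field(default_factory=list)

def prioritize(invoice: Invoice) -> Decision:
    """Score an invoice from documented criteria, recording each factor's contribution."""
    decision = Decision(invoice.invoice_id, 0.0)

    def add(points, reason):
        decision.score += points
        decision.reasons.append(f"{points:+.1f}: {reason}")

    if invoice.days_until_due < 0:
        add(10.0, f"overdue by {-invoice.days_until_due} days")
    else:
        add(max(0.0, 5.0 - invoice.days_until_due / 6), f"due in {invoice.days_until_due} days")
    if invoice.early_pay_discount_pct > 0:
        add(invoice.early_pay_discount_pct * 2,
            f"{invoice.early_pay_discount_pct}% early-payment discount")
    # Supplier size and ownership are not inputs here, so they cannot affect the score.
    return decision

d = prioritize(Invoice("INV-001", days_until_due=12, early_pay_discount_pct=2.0))
print(d.score, d.reasons)
# 7.0 ['+3.0: due in 12 days', '+4.0: 2.0% early-payment discount']
```

Because each reason is stored with its point value, an auditor or supplier can see exactly why one invoice was paid ahead of another.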

4. Integrate Human Oversight

Build human review into AP automation processes so that people can monitor automated decisions and intervene where bias may exist. This serves as a safeguard, helping to mitigate bias, ensure fair treatment of suppliers, and uphold ethical standards.
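A common human-in-the-loop pattern lets the system act automatically only when it is confident and no audit has raised a concern, and routes everything else to a reviewer. This Python sketch assumes a hypothetical model confidence score, a hypothetical set of supplier groups flagged by the most recent periodic audit, and an arbitrary 0.9 confidence cutoff.

```python
from dataclasses import dataclass

@dataclass
class AutomatedDecision:
    invoice_id: str
    action: str          # e.g. "approve" or "hold"
    confidence: float    # model's confidence in the action, 0..1
    supplier_group: str

def route(decision, flagged_groups, min_confidence=0.9):
    """Return 'auto' if the decision may proceed automatically, else 'human_review'."""
    if decision.confidence < min_confidence:
        return "human_review"   # the model is unsure
    if decision.supplier_group in flagged_groups:
        return "human_review"   # an audit has flagged this group for possible bias
    if decision.action == "hold":
        return "human_review"   # adverse actions always get a second look
    return "auto"

flagged = {"small"}  # hypothetical: set by the latest audit
queue = [
    AutomatedDecision("INV-101", "approve", 0.97, "large"),
    AutomatedDecision("INV-102", "approve", 0.95, "small"),
    AutomatedDecision("INV-103", "hold", 0.99, "large"),
    AutomatedDecision("INV-104", "approve", 0.62, "large"),
]
for d in queue:
    print(d.invoice_id, route(d, flagged))
# INV-101 auto
# INV-102 human_review
# INV-103 human_review
# INV-104 human_review
```

Sending every adverse action to a person is a deliberate design choice: it costs some efficiency but keeps the decisions most likely to harm a supplier under human control.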

A Case Study: Addressing AI Bias in AP Automation

Let’s explore a place where bias in AI might not be immediately apparent: the merger of accounting and technology in Accounts Payable (AP) automation. Within the realm of financial automation, AP automation systems have garnered significant attention for their potential to streamline processes and enhance efficiency. However, the integration of AI into AP automation introduces its own set of challenges concerning bias and fairness.

1. Amplification of Pre-existing Biases

AP automation systems rely heavily on historical data to forecast trends, classify invoices, and streamline payment workflows. However, if this historical data already contains biases – such as favoritism towards certain vendors or discrimination against certain suppliers – then the AI algorithms may unintentionally perpetuate these biases. For example, biased algorithms might prioritize larger suppliers over smaller, minority-owned businesses, thereby reinforcing existing economic inequalities.

2. Impact on Supplier Relationships

Biased decision-making in AP automation systems can also strain relationships with suppliers. If algorithms consistently favor certain vendors or unjustly delay payments to others based on factors unrelated to performance or quality, they can erode trust and damage those relationships. This harms individual businesses and can also have broader economic repercussions, particularly for small and minority-owned businesses.
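One way to test whether decisions depend on factors unrelated to performance or quality is a counterfactual check: change only an attribute that should be irrelevant (here, supplier size) and see whether the decision changes. In this Python sketch, `score_invoice` is a deliberately biased, hypothetical stand-in for a real model, and the field names and values are illustrative.

```python
from dataclasses import dataclass, replace

@dataclass(frozen=True)
class InvoiceFeatures:
    amount: float
    on_time_delivery_rate: float   # performance signal, 0..1
    supplier_size: str             # should NOT affect the decision

def score_invoice(f: InvoiceFeatures) -> bool:
    """Hypothetical stand-in model: True means the invoice is fast-tracked.
    It contains a deliberate bias toward large suppliers for demonstration."""
    score = f.on_time_delivery_rate * 10
    if f.supplier_size == "large":
        score += 3   # the bias the test should catch
    return score >= 10

def counterfactual_flips(model, cases, attribute, values):
    """Return (case, outcomes) for cases whose decision changes when only `attribute` varies."""
    flips = []
    for case in cases:
        outcomes = {v: model(replace(case, **{attribute: v})) for v in values}
        if len(set(outcomes.values())) > 1:
            flips.append((case, outcomes))
    return flips

cases = [
    InvoiceFeatures(5_000.0, 0.95, "small"),
    InvoiceFeatures(12_000.0, 0.60, "small"),
]
for case, outcomes in counterfactual_flips(score_invoice, cases, "supplier_size", ["small", "large"]):
    print(case, outcomes)
# Only the first case is printed: its decision flips from False to True when
# supplier_size changes from "small" to "large".
```

Any flip means the irrelevant attribute, or something tightly correlated with it, is influencing the outcome and warrants investigation.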

3. Legal and Reputational Risks

In the finance sector, where adherence to compliance and transparency standards is paramount, biased results from AP automation systems pose significant legal and reputational risks. Discriminatory practices in payment processing can lead to legal disputes, regulatory penalties, and damage to the organization’s reputation. Furthermore, as stakeholders become increasingly aware of the potential for bias in AI systems, organizations that fail to address these concerns may face backlash from customers, investors, and regulatory authorities.

4. Ethical Considerations

From an ethical standpoint, ensuring fairness and equity in AI models and AP automation systems is crucial. Organizations have a responsibility to uphold ethical standards in their financial operations, including the use of AI, machine learning systems, and other modern technologies. Failing to address bias in AP automation not only undermines fairness principles but also perpetuates systemic inequalities in the financial arena.

In conclusion, human decision makers should remember that while AP automation systems offer significant potential to drive efficiency and cost savings, incorporating AI into human-driven processes within these systems introduces complex challenges related to bias, fairness, and ethics.

By proactively addressing the possibility of bias through measures such as diverse data collection, regular audits, transparent practices, and human oversight, organizations can uphold ethical standards and promote fairness in their financial operations. This not only mitigates legal and reputational risks but also fosters trust among suppliers and stakeholders, which in turn contributes to a more equitable and resilient ecosystem.

Summary

As we harness the power of AI to drive innovation and efficiency, it’s essential that organizations remain vigilant against the potential for bias. Striking a delicate balance between automation and human oversight, addressing biases in training data, and championing accountability and transparency are all critical steps in fostering fair and equitable AI deployment.

By adopting ethical AI development practices, we can unlock the transformative capabilities of AI while upholding the fundamental values of fairness, justice, and inclusivity in our increasingly digital world.